What Happens Next Will Amaze You

This is the text version of a talk I gave on September 14, 2015, at the FREMTIDENS INTERNET conference in Copenhagen, Denmark.

Good morning! Today's talk is being filmed, recorded and transcribed, and everything I say today will leave an indelible trace online. In other words, it's just a normal day on the Internet. For people like me, who go around giving talks about the dystopian future, it's been an incredible year. Let me review the highlights so far:

We learned that AT&T has been cooperating with the NSA for over ten years, voluntarily sharing data far beyond anything the law required it to give. Ashley Madison, the infamous dating site for married people, was hacked, revealing personal information and easily cracked passwords for millions of users. Some of these users are already being extorted. Australia passed an incoherent and sweeping data retention law, while the UK is racing to pass a law of its own. The horrible Hacking Team got hacked, giving us a window into a sordid market for vulnerabilities and surveillance technology. The 2014 Sony Pictures hack exposed highly sensitive (and amusing) emails and employee data. And finally, deeply intrusive security questionnaires for at least 18 million federal job applicants were stolen from the US Office of Personnel Management.

Given this list, let me ask a trick question. What was the most damaging data breach in the last 12 months? The trick answer is: it's likely something we don't even know about. When the Snowden revelations first came to light, it felt like we might be heading towards an Orwellian dystopia. Now we know that the situation is much worse. If you go back and read Orwell, you'll notice that Oceania was actually quite good at data security. Our own Thought Police is a clown car operation with no checks or oversight, no ability to keep the most sensitive information safe, and no one behind the steering wheel.

The proximate reasons for the culture of total surveillance are clear. Storage is cheap enough that we can keep everything. Computers are fast enough to examine this information, both in real time and retrospectively. Our daily activities are mediated by software that can easily be configured to record everything it sees and report it upstream. But to fix surveillance, we have to address the underlying reasons that it exists. These are no mystery either. State surveillance is driven by fear.

And corporate surveillance is driven by money.

The two kinds of surveillance are as intimately connected as tango partners. They move in lockstep, eyes rapt, searching each other's souls. The information they collect is complementary. By defending its own worst practices, each side enables the other. Today I want to talk about the corporate side of this partnership. In his excellent book on surveillance, Bruce Schneier points out that we would never agree to carry tracking devices and report all our most intimate conversations if the government made us do it. But under such a scheme, we would enjoy more legal protections than we have now. By letting ourselves be tracked voluntarily, we forfeit all protection against how that information is used. Those who control the data gain enormous power over those who don't. The power is not overt, but implicit in the algorithms they write, the queries they run, and the kind of world they feel entitled to build. In this world, privacy becomes a luxury good. Mark Zuckerberg bought the four houses around his house in Palo Alto to keep hidden what the rest of us must share with him. It used to be that celebrities and the rich were the ones denied a private life; now it's the other way around. Let's talk about how to fix it.

There's a wonderful quote from a fellow named Martin McNulty, CEO of an ad company called Forward 3D: “I never thought the politics of privacy would come close to my day-to-day work of advertising. I think there’s a concern that this could get whipped up into a paranoia that could harm the advertising industry.” I am certainly here to whip up paranoia that I hope will harm the advertising industry. But his point is a good one. There's nothing about advertising that is inherently privacy-destroying. It used to be a fairly innocuous business model. Tying ads to the complete invasion of privacy is a recent phenomenon.

In the beginning, there was advertising. It was a simple trinity of publisher, viewer, and advertiser. Publishers wrote what they wanted and left empty white rectangles for ads to fill. Advertisers bought the right to put things in those rectangles. Viewers occasionally looked at the rectangles by accident and bought the products and services they saw pictured there. Life was simple. There were ad agencies to help match publishers with advertisers, figure out what should go in the rectangles, and attempt to measure how well the ads were working. But this primitive form of advertising remained more art than science. The old chestnut had it that half of advertising money was wasted, but no one could tell which half.

When the Internet came, there was much rejoicing. For the first time, advertisers could know how many times their ads had been shown, and how many times they had been clicked. Clever mechanisms started connecting clicks with sales. Ad networks appeared. Publishers no longer had to sell all those empty rectangles themselves. They could just ask Google to fill them, and AdSense would dynamically match the space to available ads at the moment the page was loaded. Everybody made money. The advertisers looked upon this and saw that it was good.

Soon the web was infested with all manner of trackers, beacons, pixels, tracking cookies and bugs. Companies learned to pool their data so they could follow customers across many sites. They created user profiles of everyone using the web. They could predict when a potential customer was about to do something expensive, like have a baby or get married, and tailor ads specifically to them. They learned to notice when people put things in a shopping cart and then failed to buy them, so they could entice them back with special offers. They got better at charging different prices to people based on what they could afford: the dream of every dead-eyed economist since the dawn of the profession. Advertisers were in heaven. No longer was half their budget wasted. Everything was quantified on a nice dashboard. Ubiquitous surveillance and the sales techniques it made possible increased revenue by fifteen, twenty, even thirty percent.

And then the robots came.

Oh, the robots were rudimentary at first: just little snippets of code that would load a web page and pretend to click ads. Detecting them was a breeze. But there was a lot of money moving into online ads, and robots love money. They learned quickly. Soon they were using real browsers, with JavaScript turned on, and it was harder to distinguish them from people. They learned to scroll, hover, and move the mouse around just like you and me.

Ad networks countered each improvement on the robot side. They learned to look for more and more subtle signals that the ad impression was coming from a human being, in the process creating invasive scripts to burrow into the visitor's browser. Soon the robots were disguising themselves as people. Like a monster in a movie that pulls on a person's face like a mask, the ad-clicking robots learned to wear real people's identities. They would hack into your grandparents' computer and use their browser history, cookies, and all the tracking data that lived on the machine to go out and perpetrate their robotic deeds. With each iteration it got harder to tell the people and the robots apart.

The robots were crafty. They would do things like load dozens of ads into a single pixel, stacking them on top of one another. Or they would run a browser on a hidden desktop with the sound off, viewing video after video, clicking ad after ad. They learned to visit real sites and fill real shopping carts to trigger the more expensive types of retargeting ads.

Today we live in a Blade Runner world, with ad robots posing as people, and Deckard-like figures trying to expose them by digging ever deeper into our browsers, implementing Voight-Kampff machines in JavaScript to decide who is human. We're the ones caught in the middle. The ad networks' name for this robotic deception is 'ad fraud' or 'click fraud'. (Advertisers like to use moralizing language when their money starts to flow in the wrong direction. Tricking people into watching ads is good; being tricked into showing ads to automated traffic is evil.)

Ad fraud works because the market for ads is so highly automated. As in algorithmic trading, decisions happen in fractions of a second, and matchmaking between publishers and advertisers is outside human control. It's a confusing world of demand-side platforms, supply-side platforms, retargeting, pre-targeting, behavioral modeling, real-time bidding, ad exchanges, ad agency trading desks and a thousand other bits of jargon. Because the payment systems are also automated, it's easy to cash out of the game. And that's how the robots thrive. It boils down to this: fake websites serving real ads to fake traffic for real money. And it's costing advertisers a fortune.

Just how much money robot traffic absorbs is hard to measure. The robots actually notice when they're being monitored and scale down their activity accordingly. Depending on whose estimate you trust, ad fraud consumes anywhere from 10 to 50% of your ad budget. In some documented cases, over 90% of the ad traffic being monitored was non-human. So those profits to advertisers from mass surveillance (the fifteen to thirty percent boost in sales I mentioned) are an illusion. The gains are lost, like tears in the rain, to automated ad fraud. Advertisers end up right back where they started, still not knowing which half of their advertising budget is being wasted. Except in the process they've destroyed our privacy.

The winners in this game are the ones running the casino: big advertising networks, surveillance companies, and the whole brand-new industry known as "adtech". The losers are small publishers and small advertisers. Universal click fraud drives down the value of all advertising, making it harder for niche publishers to make ends meet. And it ensures that any advertiser who doesn't invest heavily in countermeasures and tracking will get eaten alive.

But the biggest losers are you and me. Advertising-related surveillance has destroyed our privacy and made the web a much more dangerous place for everyone. The practice of serving unvetted third-party content chosen at the last minute, with no human oversight, creates ideal conditions for malware to spread. The need for robots that can emulate human web users drives a market for hacked home computers. It's no accident that the ad racket so closely resembles high-frequency trading. A small number of sophisticated players are making a killing at the expense of everybody else. The biggest profits go to the most ruthless, encouraging a race to the bottom.

The ad companies' solution to click fraud is to increase tracking. And they're trying to convince browser vendors to play along. If they could get away with it, they would demand that you have your webcam turned on, to make sure you are human. And they would track your eye movements, and your facial expression, and round and round we go. I don't believe there's a technology bubble, but there is absolutely an advertising bubble. When it bursts, companies are going to be more desperate and will unload all the personal data they have on us to absolutely any willing buyer. And then we'll see if all these dire warnings about the dangers of surveillance were right.

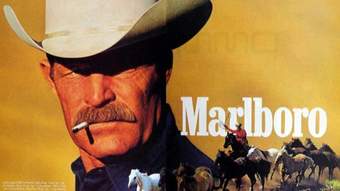

(A PARENTHETICAL COMMENT ABOUT DESPAIR)

It's easy to get really depressed at all this. It's important that we not let ourselves lose heart. If you're over a certain age, you'll remember what it was like when every place in the world was full of cigarette smoke. Airplanes, cafes, trains, private offices, bars, even your doctor's waiting room: it all smelled like an ashtray. Today we live in a world where you can go for weeks without smelling a cigarette if you don't care to. The people of 1973 were no happier to live in that smoky world than we would be, but changing it seemed unachievable. Big Tobacco was a seemingly invincible opponent. Getting back the right to breathe clean air required a combination of social pressure, legal action, activism, regulation, and patience.

It took a long time to establish that environmental smoke exposure was harmful, and even longer to translate this into law and policy. We had to believe in our capacity to make these changes happen for a long time before we could enjoy the results.

I use this analogy because the harmful aspects of surveillance have a long gestation period, just like the harmful effects of smoking, and reformers face the same kind of well-funded resistance. That doesn't mean we can't win. But it does mean we have to fight.

Today I'd like to propose some ways we can win back our online privacy. I brought up the smoking example because it shows how a mix of political, legal, regulatory and cultural strategies can achieve results that would otherwise seem fantastical. So let's review the strategies, new and old, that are helping us push back against the culture of total surveillance.

Technical Solutions

As technical people, we will always reach for technical solutions first. They're appealing because we can implement them ourselves, without having to convince a vendor, judge, lawmaker, or voter.

Ad Blocking

I think of ad blocking like mosquito repellent. If you combine DEET, long sleeves, and a face net, you can get almost full protection. But God forbid you have an inch of skin showing somewhere. Ad blocking has seen a lot of activity. The EFF recently released a tool called Privacy Badger that specifically targets ad tracking, not just ad display. And soon iOS will make it possible for users to install third-party apps that block ads. One issue is that you have to trust the ad blocker. Blocking works well right now because it's not widespread. The technology is likely to become a victim of its own success, and at that point it will face intense pressure to allow ads through. We already see signs of strain with Adblock Plus and its notion of "acceptable ads". There are more fundamental conflicts of interest, too. Consider the spectacle of the Chrome team, employed by Google, fighting against the YouTube ads team, also employed by Google. Remember that Google makes almost all its money from ad revenue. I've met people on the Chrome team and they are serious and committed to defending their browser. I've also met people on the YouTube ads team, and they hate their lives and want to die. But it's still a strange situation to find yourself in. As ad blocking becomes widespread, we'll see these tensions get worse, and we'll also see more serious technical countermeasures. Already, startups are popping up with the explicit goal of defeating ad blocking. This exposes the grim, truly adversarial nature of online ads, and some publishers may feel they have no choice but to declare war on their visitors. Ad blockers help us safeguard an important principle: that the browser is fundamentally our user agent. It's supposed to be on our side. It sticks up for us. We get to control its behavior. No amount of moralizing about our duty to view unwanted advertisements can change that.

Consumer Countermeasures

A second, newer kind of technical countermeasure uses the tools of surveillance to defeat discriminatory pricing. People have long noticed that they get shown different prices depending on where they visit a site from, or even what day of the week they're shopping. So far this knowledge has been passed around as folklore, but lately we've seen more systematic efforts like $herrif. $herrif collects data from many users in order to show you how the price you're being shown compares to what others have seen for the same product. An obvious next step will be to let users make purchases for one another, as a form of arbitrage.

Technical solutions are sweet, but they're also kind of undemocratic. Since they work best when few people are using them, and since the tech elite is the first to adopt them, they can become another way in which the Internet simply benefits the nerd class at the expense of everyone else.
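
To make crowdsourced price comparison of the $herrif kind concrete, here is a minimal sketch. It is not $herrif's actual code; it assumes we already hold a list of prices other users reported seeing for the same product, and the helper name `compare_price` is hypothetical. It reports the median price others saw, the share of them who were shown less than you, and how far you sit above the cheapest observation.

```python
from statistics import median


def compare_price(my_price: float, observed_prices: list[float]) -> dict:
    """Compare the price shown to me against prices other users reported.

    Hypothetical helper: `observed_prices` stands in for crowdsourced
    observations of the same product, pooled from many users.
    """
    if not observed_prices:
        raise ValueError("need at least one observed price")
    cheaper = sum(1 for p in observed_prices if p < my_price)
    return {
        "median_seen": median(observed_prices),
        # Fraction of other users who were shown a lower price than me.
        "share_cheaper": cheaper / len(observed_prices),
        # How much more I'm being charged than the best price anyone saw.
        "overpaying_by": max(0.0, my_price - min(observed_prices)),
    }


report = compare_price(120.0, [99.0, 105.0, 120.0, 130.0])
print(report)
```

The arbitrage idea follows naturally: whoever sees the `min(observed_prices)` offer could buy on behalf of the others.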

Legal Remedies

The second layer of attack is through the legal system. In Europe the weapon of choice is privacy law. The EU charter has a lot to say about privacy (it has a lot to say about everything), and individual countries like Germany have even more stringent protections in place. Forcing American companies doing business in the EU to adhere to those principles has been an effective way to rein in their behavior. The biggest flaw in European privacy law is that it exists to safeguard individuals. Its protections are not very effective against mass surveillance, which no one considered a possibility at the time these laws were formulated. One example of EU privacy law in action is the infamous "right to be forgotten", where courts are ordering Google to take down links to information, and even (in an Orwellian twist) demanding that it de-list news stories describing the court order.

In the US, the preferred weapon is discrimination law. The Supreme Court recently ruled that a pattern of discrimination is enough; you don't have to prove intent. That opens the door to lawsuits against sites that discriminate against protected categories of people in practice, whatever the algorithmic reason.

A problem with legal remedies is that you're asking people who are technical experts in one field, the law, to create policy in a different technical field and make sweeping decisions. Those decisions, once made, become binding precedent. This creates another situation where elites end up making rules for everyone else. The right to be forgotten is a good cautionary tale in this respect. No one understands its full implications. A panel of unelected experts ends up making decisions on a case-by-case basis, compounding the feeling of unaccountability. Courts are justifiably loath to make policy. They are not democratic institutions and should not be trying to change the world. By its nature, the legal system always lags behind, waiting for a broad social consensus before making decisions. And the litigation itself is expensive and slow.

Laws and Regulations

There's a myth in Silicon Valley that any attempt to regulate the Internet will destroy it, or that only software developers can understand the industry. When I flew over to give this talk, I wasn't worried about my plane falling out of the sky. Eighty years of effective technical regulation (and massive penalties for fraud) have made commercial aviation the safest form of transportation in the world. Similarly, when I charged my cell phone this morning, I had confidence that it would not burn down my apartment. I have no idea how electricity works, but sound regulation has kept my appliances from electrocuting me my entire life.

The Internet is no different. Let's not forget that it was born out of regulation and government funding. Its roots are in military research, publicly funded universities and, for some reason, a particle accelerator. It's not like we're going to trample a delicate flower by suddenly regulating what had once been wild and untamed.

That doesn't mean that regulations can't fail. The European cookie law is a beautiful example of regulatory disaster. If I want to visit a site in the EU, I need to click through what's basically a modal dialog that asks me if I want to use cookies. If I don't agree, the site may be borderline unusable.

Could I ask you to close your eyes for a minute? Those of you who feel you really understand the privacy implications of cookies, please raise your hands (about ten hands go up in an audience of three hundred). Thank you. You can open your eyes. Those of you who raised your hands, please see me after the talk. I'm a programmer and I wander the earth speaking about this stuff, but I don't feel I fully understand the implications of cookies for privacy. It's a subtle topic. So why is my mom supposed to make a reasoned decision about it every time she wants to check the news or weather? And I say "every time" because the only way European sites remember that you don't want to use cookies is... by setting a cookie. People who are serious about not being tracked end up harassed with every page load.

Done poorly, laws introduce confusion and bureaucracy while doing nothing to address the problem. So let's try to imagine some better laws!

Six Fixes

- Right To Download
- Right To Delete
- Limits on Behavioral Data Collection
- Right to Go Offline
- Ban on Third-Party Advertising
- Privacy Promises

There are a few guiding principles we should follow in any attempt at regulating the Internet. The goal of our laws should be to reduce the number of irrevocable decisions we make, and to enforce the same kind of natural forgetfulness that we enjoy offline. The laws should be something average people can understand, setting down principles without tethering them to a specific technical implementation. Laws should apply to how data is stored, not how it's collected. There are a thousand and one ways to collect data, but ultimately you either store it or you don't. Regulating the database makes laws much harder to circumvent. Laws will obviously apply in only one jurisdiction, but they should require services to protect all users equally, regardless of their citizenship or physical location. Laws should have teeth. Companies that violate them must face serious civil penalties that fall on the directors of the company and can't be deflected with legal maneuvering. Anyone who intentionally violates these laws should face criminal charges. With that said, here are six fixes that I think would make the Internet a much better place:

Right To Download

You should have the right to download data that you have provided, or that has been collected by observing your behavior, in a usable electronic format. The right to download is self-evident, but it has some hidden side effects. It forces companies to better account for the data they collect, instead of indiscriminately spraying it around their datastore. Consider the case of Max Schrems, who sued Facebook to obtain 1200 pages of material on CD, after much stonewalling. Facebook complained that it wasn't technically possible to comply with this request. I'm sure they had to make internal changes as a result. A universal right to download would give users a clear idea of how much information is being collected about their behavior. Study after study shows that people underestimate the degree of surveillance they're under.
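
Here is a minimal sketch of what honoring a download request might involve, assuming a hypothetical service that keeps user-provided profile fields and observed behavioral events in two separate stores. The names `PROFILES`, `EVENTS`, and `export_user_data` are illustrative, not any real company's schema. The point is the side effect described above: to export everything, the service has to know where every kind of data about you lives.

```python
import json

# Hypothetical stores: data the user typed in, and data observed about them.
PROFILES = {"u1": {"name": "Ada", "email": "ada@example.com"}}
EVENTS = [
    {"user": "u1", "type": "page_view", "url": "/news"},
    {"user": "u2", "type": "page_view", "url": "/weather"},
    {"user": "u1", "type": "ad_click", "ad_id": "a42"},
]


def export_user_data(user_id: str) -> str:
    """Return everything held about `user_id` as one JSON document."""
    export = {
        "provided": PROFILES.get(user_id, {}),
        # Behavioral data counts too, not just what the user entered.
        "observed": [e for e in EVENTS if e["user"] == user_id],
    }
    return json.dumps(export, indent=2)


print(export_user_data("u1"))
```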

Right To Delete

You should have the right to completely remove your account and all associated personal information from any online service, whenever you want. Programmers recoil from deleting anything, since mistakes can be so dangerous. Many online services (including mine!) practice a kind of 'soft delete', where accounts are flagged but the data never gets deleted. Even sites that actually delete accounts still keep backups, sometimes indefinitely, where that data lives on forever. The right to delete would entail stricter limits on how long we keep backups, which is a good thing. Banks and governments have laws about how long they can retain documents. Not having infinite backups might help us take better care of what we do keep. Under this rule, the CEO of Ashley Madison, a website that charged users a fee to delete their accounts and then didn't delete them, would be on trial right now and facing jail time.
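
The difference between a 'soft delete' and a real one is easy to see in SQL. This sketch uses Python's built-in `sqlite3` with an in-memory database and a hypothetical `accounts` table; the column and function names are assumptions for illustration. Note that even the hard delete only touches the live table, not any backups taken earlier.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema: `deleted` is the flag a soft delete sets.
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, email TEXT, deleted INTEGER DEFAULT 0)"
)
conn.executemany(
    "INSERT INTO accounts (id, email) VALUES (?, ?)",
    [(1, "a@example.com"), (2, "b@example.com")],
)


def soft_delete(account_id: int) -> None:
    # Common practice: hide the account, but the personal data stays stored.
    conn.execute("UPDATE accounts SET deleted = 1 WHERE id = ?", (account_id,))


def hard_delete(account_id: int) -> None:
    # What a right to delete would require: the row is actually removed.
    conn.execute("DELETE FROM accounts WHERE id = ?", (account_id,))


soft_delete(1)
hard_delete(2)
rows = conn.execute("SELECT id, email, deleted FROM accounts").fetchall()
print(rows)  # [(1, 'a@example.com', 1)]: account 1's email is still there
```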

Limits On Behavioral Data

Companies should only be allowed to store behavioral data for 90 days. Behavioral data is any information about you that you don't explicitly share, but that services can observe. This includes your IP address, cell tower, inferred location, recent search queries, the contents of your shopping cart, your pulse rate, facial expression, what pages or ads you clicked, browser version, and on and on. At present, all of this data can be stored indefinitely and sold or given away. Companies should be prohibited from selling or otherwise sharing behavioral data. Limiting behavioral data would constrain a lot of the most invasive behavior while still letting companies build recommendation engines and predictive search, train their speech recognition and their stupid overhyped neural networks, or do whatever else they want. They just don't get to keep the data forever.
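
Enforcing a 90-day limit on stored behavioral data can be as mundane as a scheduled purge. The sketch below filters an in-memory list; the event records and the `observed_at` field are hypothetical, and a real service would run the equivalent deletion against its datastore (and, per the previous fix, its backups).

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=90)  # the limit proposed above


def purge_behavioral(events: list[dict], now: datetime) -> list[dict]:
    """Keep only behavioral events observed within the retention window."""
    cutoff = now - RETENTION
    return [e for e in events if e["observed_at"] >= cutoff]


now = datetime(2015, 9, 14, tzinfo=timezone.utc)
# Hypothetical observed events of different ages.
events = [
    {"kind": "ip_address", "observed_at": now - timedelta(days=10)},
    {"kind": "search_query", "observed_at": now - timedelta(days=89)},
    {"kind": "ad_click", "observed_at": now - timedelta(days=120)},
]
kept = purge_behavioral(events, now)
print([e["kind"] for e in kept])  # ['ip_address', 'search_query']
```

Because the rule is stated in terms of what is stored, it doesn't matter how each event was collected; the purge treats them all alike.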

The Right to Go Offline

Any device with embedded Internet access should be required to have a physical switch that disconnects the antenna, and be able to function normally with that switch turned off. This means your Internet-connected light bulb, thermostat, mattress cover, e-diaper, fitness tracker, bathroom scale, smart cup, smart plate, smart fork, smart knife, flowerpot, toaster, garage door, baby monitor, yoga mat, sport-utility vehicle, and refrigerator should all be usable in their regular, 'dumb' form. If we're going to have networked devices, we need a foolproof way of disconnecting them. I don't want to have to log in to my pencil sharpener's web management interface to ask it to stop spinning because some teenager in Andorra figured out how to make it spin all night.

Samsung recently got in hot water with its smart refrigerator. Because it failed to validate SSL certificates, the fridge would leak your Gmail credentials (used by its little calendar) to anyone who asked. All I wanted was some ice, and instead my email got hacked. We need an Internet off switch.
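
Samsung's firmware isn't public, so this is a generic illustration rather than the fridge's actual code. Python's standard `ssl` module shows the two checks a TLS client needs before it hands over credentials: the server's certificate must chain to a trusted authority, and it must match the hostname being contacted. The second half shows the kind of shortcut that lets an impostor server collect whatever the client sends.

```python
import ssl

# create_default_context() enables both checks by default:
# the certificate must be signed by a trusted CA, and must match the hostname.
ctx = ssl.create_default_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED)  # True
print(ctx.check_hostname)                    # True

# The insecure pattern: switching verification off means any server that
# answers can impersonate the real one and receive the credentials.
insecure = ssl.create_default_context()
insecure.check_hostname = False  # must be disabled before verify_mode
insecure.verify_mode = ssl.CERT_NONE
print(insecure.verify_mode == ssl.CERT_NONE)  # True
```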

Ban on Third-Party Ad Tracking

Sites serving ads should only be able to target those ads based on two things:

- the content of the page itself
- information the site has about the visitor

Publishers could still use third-party ad networks (like AdSense) to serve ads. I'm not a monster. But those third parties would have to be forgetful, using only the criteria the publisher provided to target the ad. They would not be allowed to collect, store, or share any user data on their own. This ban would eliminate much of the advertising ecosystem, which is one of the best things about it. It would especially remove much of the economic incentive for botnets and adware, which piggyback on users' histories in an attempt to pass themselves off as 'real' users. Ads would get dumber. Sites would get safer. Your phone would no longer get hot to the touch or take five minutes to render a blog post. Sites with niche content might be able to make a living from advertising again. This proposal sounds drastic, but it really only restores the status quo we had as recently as 2004. The fact that it sounds radical attests to how far we've allowed unconstrained surveillance to take over our online lives.
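
To make the idea of a "forgetful" third party concrete, here is a hypothetical sketch of an ad-selection function that sees only what the publisher passes in (page keywords, plus an optional visitor segment the site itself chose to share) and keeps no state between calls. The inventory, the keyword-overlap scoring, and every name here are illustrative assumptions, not how AdSense or any real network works.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical ad inventory: each ad lists the topics it wants to appear next to.
ADS = {
    "tent": {"camping", "hiking"},
    "stroller": {"parenting", "baby"},
}


@dataclass(frozen=True)
class AdRequest:
    """Everything the ad network may see, all of it supplied by the publisher."""
    page_keywords: frozenset
    publisher_segment: Optional[str] = None  # what the site knows about its visitor


def choose_ad(request: AdRequest) -> str:
    """Pick the ad whose topics best overlap the publisher-supplied terms.

    Stateless by design: no cookies, no user ID, nothing stored between
    calls, so the network cannot build a profile across sites.
    """
    terms = set(request.page_keywords)
    if request.publisher_segment is not None:
        terms.add(request.publisher_segment)
    # Score each ad by topic overlap; ties are broken by comparing ad names.
    score, ad_id = max((len(topics & terms), ad_id) for ad_id, topics in ADS.items())
    return ad_id if score > 0 else "house_ad"  # fall back to an untargeted ad


print(choose_ad(AdRequest(frozenset({"hiking", "boots"}))))  # tent
print(choose_ad(AdRequest(frozenset({"news"}), publisher_segment="parenting")))  # stroller
```

The design choice worth noticing is what's absent: the function has no parameter through which a cross-site identity could arrive, so "dumber" ads fall out of the interface itself.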

Privacy Promises

Unlike the first five fixes, the last fix I propose is not a prohibition or ban. Rather, it's a legal mechanism that lets companies make enforceable promises about their behavior. Take the example of Snapchat, which is supposed to show you photographs for a few seconds, then delete them. It makes that promise in a terms of service document. In reality, Snapchat kept the images on its servers for much longer, along with sensitive user information like location data that it claimed not to collect. And of course, Snapchat got hacked. What were the consequences? The Federal Trade Commission told Snapchat that it would keep a close eye on its privacy practices for the next twenty years, to make sure it doesn't happen again. There was no fine or other sanction. That was it. I want a system where you can make promises that are more painful to break. My inspiration for this is Creative Commons, founded in 2001. Creative Commons gave people legal tools to say things like "you can use this for non-commercial purposes", or "you're free to use this if you give me credit". Similarly, as a website owner, I want a legal mechanism that lets me make binding promises like "I don't store any personally identifying data", or "I will completely delete photos within one minute of you pressing the 'delete' button". A system of enforceable promises would let companies actually compete on privacy. It would make possible innovative ephemeral services that are infeasible now because of privacy concerns.

While these sound like ambitious goals, note that their effect is to restore the privacy status quo of the first dot-com boom. As valuable a victory as this would be, it's a somewhat passive and reactive goal. I'd like to spend the last few minutes ranting about ways we could take the debate beyond privacy, and what it would mean for technology to really improve our lives.

This summer I took a long train ride from Vienna to Warsaw. As I looked out the window hour after hour, it struck me that everything I was seeing (every car, house, road, signpost, even every telegraph pole) had been built after 1990. I'd been visiting Poland regularly since I was a kid, but the magnitude of the country's transformation hadn't really sunk in before. And I felt proud. In spite of all the fraud, misgovernment, incompetence, and general Polishness of the post-communist transition, despite all our hardships and failures, in twenty years we had made the country look like Europe. The material basis of people's lives was incomparably better than it had been before.

I'd like to ask: how many of you have been to San Francisco? (About a quarter of the audience raises their hands.) How many of you were shocked by the homelessness and poverty you saw there? (Most of the hands stay up.) For the rest of you, if you visit San Francisco, this is something you're likely to find unsettling. You'll see people living in the streets, many of them mentally ill, yelling and cursing at imaginary foes. You'll find every public space designed to make sitting down or sleeping difficult and uncomfortable, and people sitting down and sleeping there anyway. You'll see human excrement on the sidewalks, and a homeless encampment across from City Hall. You'll find you can walk for miles and not come across a public toilet or water fountain. If you stay in the city for any length of time, you'll start to notice other things. Lines outside every food pantry and employment office. Racially segregated neighborhoods where poverty gets hidden away, even in the richest parts of Silicon Valley. A city bureaucracy where everything is still done on paper, slowly. A stream of constant petty crime by the destitute. Public schools that no one sends their kids to if they can find an alternative. Fundraisers for notionally public services. You can't even get a decent Internet connection in San Francisco. The tech industry is not responsible for any of these problems. But it's revealing that through forty years of unimaginable growth, and eleven years of the greatest boom times we've ever seen, we've done nothing to fix them. I say without exaggeration that the Loma Prieta earthquake in 1989 did more for San Francisco than Google, Facebook, Twitter, and all the rest of the tech companies that have put down roots in the city since. Despite being at the center of the technology revolution, the Bay Area has somehow failed to capture its benefits.

It's not that the city's social problems are invisible to the programming class. But in some way, they're not important enough to bother with. Why solve homelessness in one place when you can solve it everywhere? Why fix anything locally, if the solutions can scale?

And so we end up making life easier for tech workers, assuming that anything we do to free up their time will make them more productive at fixing the world for the rest of humanity. This is trickle-down economics of the worst kind.

In the process, we've turned our city into a residential theme park, with a whole parallel world of services for the rich. There are luxury commuter buses to take us to work, private taxis, valet parking, laundry and housecleaning startups that abstract human servants behind a web interface. We have a service sector that ensures instant delivery of any conceivable consumer good, along with some pretty inconceivable ones. There are no fewer than seven luxury mattress startups. There's a startup to pay your neighbor to watch your packages for you.

My favorite is a startup you can pay to move your trash bins, once a week, three meters to the curb. If at the height of boom times we can look around and not address the human crisis of our city, then when are we ever going to do it? And if we're not going to contribute to our own neighborhoods, to making the places we live in and move through every day convenient and comfortable, then what are we going to do for the places we don't ever see?

You wouldn't hire someone who couldn't make themselves a sandwich to be the head chef in your restaurant. You wouldn't hire a gardener whose houseplants were all dead. But we expect that people will trust us to reinvent their world with software even though we can't make our own city livable.

A few months ago I suggested on Twitter that Denmark take over city government for five years. You have about the same population as the Bay Area. You could use the change of climate. It seemed like a promising idea. I learned something that day: Danes are an incredibly self-critical people. I got a storm of tweets telling me that Denmark is a nightmarish hellscape of misgovernment and bureaucracy. Walking around Copenhagen, I can't really see it, but I have to respect your opinion. So that idea is out. But we have to do something.

Our venture capitalists have an easy answer: let the markets do the work. We'll try crazy ideas, most of them will fail, but those few that succeed will eventually change the world. But there's something very fishy about California capitalism. Investing has become the genteel occupation of our gentry, like having a country estate used to be in England. It's a class marker and a socially acceptable way for rich techies to pass their time. Gentlemen investors decide what ideas are worth pursuing, and the people pitching to them tailor their proposals accordingly. The companies that come out of this are no longer pursuing profit, or even revenue. Instead, the measure of their success is valuation—how much money they've convinced people to tell them they're worth. There's an element of fantasy to the whole enterprise that even the tech elite is starting to find unsettling.

We had people like this back in Poland, except instead of venture capitalists we called them central planners. They too were in charge of allocating vast amounts of money that didn't belong to them. They too honestly believed they were changing the world, and offered the same kinds of excuses about why our day-to-day life bore no relation to the shiny, beautiful world that was supposed to lie just around the corner.

Even those crusty, old-fashioned companies that still believe in profit are not really behaving like capitalists. Microsoft, Cisco and Apple are making a fortune that just sits offshore. Apple alone has nearly $200 billion in cash that is doing nothing. We'd be better off if Apple bought every employee a fur coat and a Bentley, or even just burned the money in a bonfire. At least that would create some jobs for money shovelers and security guards. Everywhere I look there is this failure to capture the benefits of technological change.

So what kinds of ideas do California central planners think are going to change the world? Well, right now, they want to build space rockets and make themselves immortal. I wish I was kidding.

Here is Bill Maris, of Google Ventures. This year alone Bill gets to invest $425 million of Google's money, and his stated goal is to live forever. He's explained that the worst part of being a billionaire is going to the grave with everyone else. “I just hope to live long enough not to die.” I went to school with Bill. He's a nice guy. But making him immortal is not going to make life better for anyone in my city. It will just exacerbate the rent crisis.

Here's Elon Musk. In a television interview this week, Musk said: "I'm trying to do useful things." Then he outlined his plan to detonate nuclear weapons on Mars. These people are the face of our industry.

Peter Thiel has publicly complained that giving women the vote back in 1920 has made democratic capitalism impossible. He asserts that "the fate of our world may depend on the effort of a single person who builds or propagates the machinery of freedom that makes the world safe for capitalism." I'm so tired of this shit. Aren't you tired of this shit?

Maybe the way to fix it is to raise the red flag, and demand that the fruits of the technical revolution be distributed beyond our small community of rich geeks. I'll happily pay the 90% confiscatory tax rate, support fully-funded maternity leave, free hospital care, real workers' rights, whatever it takes. I'll wear the yoke of socialism and the homespun overalls.

Or maybe we need to raise the black flag, and refuse to participate in the centralized, American, plutocratic Internet, working instead on an anarchistic alternative.

Or how about raising the Danish flag? You have a proud history of hegemony and are probably still very good at conquering. I would urge you to get back in touch with this side of yourselves, climb in the longboats, and impose modern, egalitarian, Scandinavian-style social democracy on the rest of us at the point of a sword.

The only thing I don't want to do is to raise the white flag. I refuse to believe that this cramped, stifling, stalkerish vision of the commercial Internet is the best we can do.

POLITE Q/A SESSION FOLLOWED BY A TSUNAMI OF APPLAUSE

Warm thanks to Joshua Schachter, Thomas Ptacek, Cathy O'Neil, and Ethan Zuckerman for helping me wrestle some of these ideas to the ground. Whether or not I was able to pin them is another matter, and not their fault.